AI checks for production: monitoring that prevents errors

Dec 28, 2025

AI in production is not a pilot experiment. One wrong quote, an incorrect booking, or a data leak can immediately cost revenue, margin, and reputation. What you need is a robust check for AI in production: a set of monitors, validations, and feedback loops that catch mistakes before they cause damage.

What does a “check for AI” mean in production?

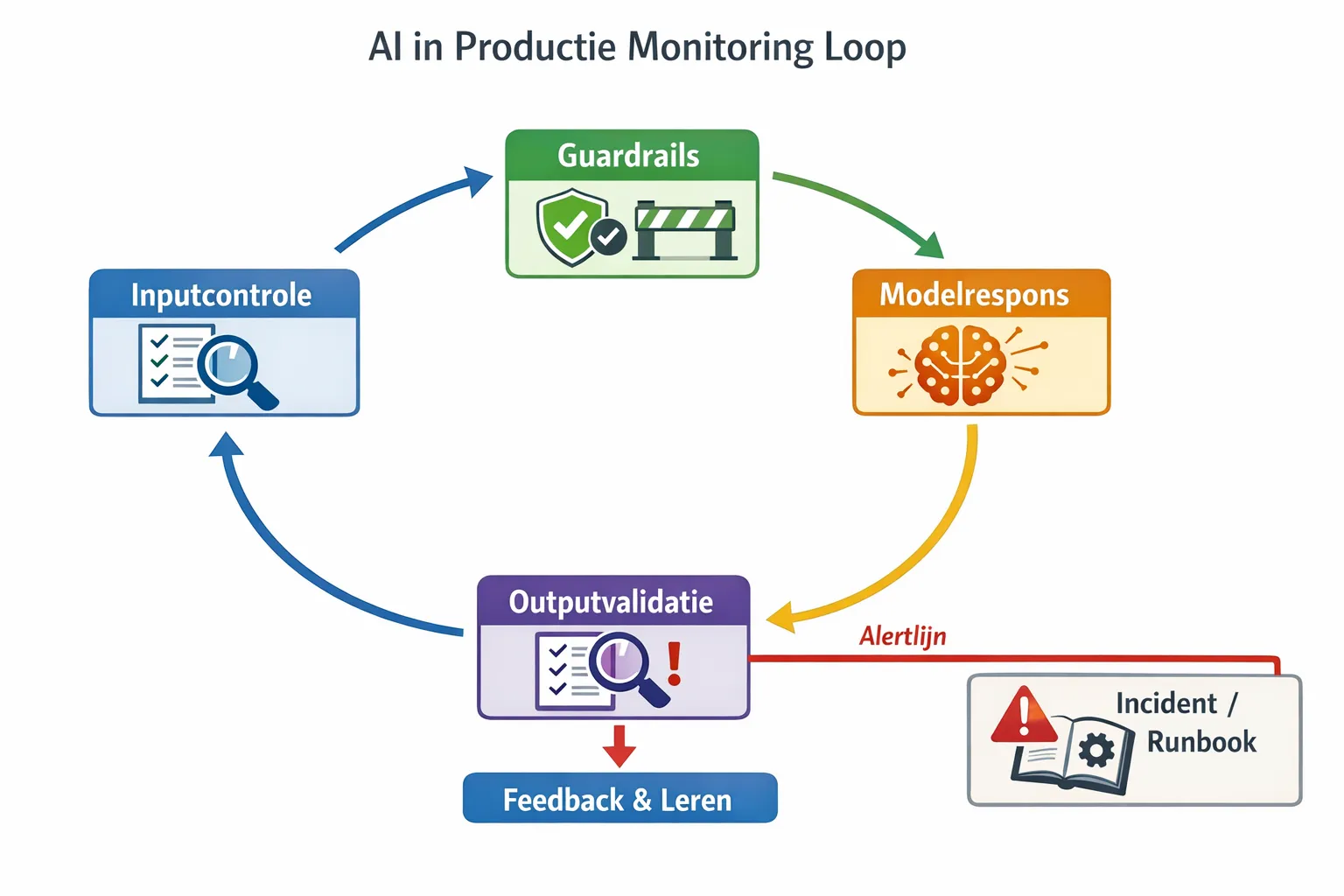

A check for AI is a systematic layer of controls around your AI workflows, from input to output and back into feedback. Not just dashboards after the fact, but runtime guardrails, automated validations, alerts, and clear escalation paths. The goal is simple: let AI work faster with fewer errors, under measurable oversight via Service Level Objectives.

Typical failure scenarios you can prevent with this:

Quote copilots that suggest pricing below minimum margin or use outdated terms.

Outreach automation that sends duplicate emails or WhatsApp messages, increasing spam and bounce rates.

Invoice classification that assigns the wrong general ledger accounts, causing corrections and delays.

Inventory recommendations that overestimate peak demand, leading to expensive overstock.

Document assistants that hallucinate by adding non-existent facts or sources.

Prompt injection, or PII in prompts that unintentionally leaks to an external model.

The production-ready monitoring blueprint in 7 layers

1. SLOs and error budgets that understand the business

Define an SLO per flow, for example 99 percent JSON validity for outputs, 95 percent groundedness for RAG answers, or a maximum of 2 percent fallback usage per day.

Tie these to business KPIs such as Time to Quote, First Contact Resolution, pick accuracy, DSO, or no-show rate.

Reserve an error budget. If it is exhausted, pause releases and fix structural root causes.
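The error budget arithmetic fits in a few lines. This is a minimal sketch, assuming you already count total and failed events per flow for each SLO window; the `error_budget` helper and `BudgetStatus` fields are hypothetical names, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class BudgetStatus:
    allowed_failures: float  # failures the SLO tolerates in this window
    actual_failures: int     # failures observed so far
    remaining: float         # allowed minus actual (negative means overspent)
    freeze_releases: bool    # True once the budget is exhausted


def error_budget(slo_target: float, total: int, failed: int) -> BudgetStatus:
    """Compute the error budget for one flow over one SLO window.

    slo_target is a fraction, e.g. 0.99 for "99 percent JSON validity".
    """
    if not 0.0 < slo_target <= 1.0:
        raise ValueError("slo_target must be in (0, 1]")
    # Round to suppress floating-point noise such as 100.00000000000009.
    allowed = round((1.0 - slo_target) * total, 6)
    remaining = allowed - failed
    return BudgetStatus(allowed, failed, remaining, remaining <= 0)


# Example: 10,000 outputs this window, 120 failed schema validation.
status = error_budget(slo_target=0.99, total=10_000, failed=120)
print(status)
```

With a 99 percent target, 10,000 outputs allow 100 failures, so 120 failures exhaust the budget and the sketch signals a release freeze.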

2. Instrumentation and observability

Log all critical events with request IDs, model version, latency, cost, and decision status, so you can trace root causes quickly.

Trace the entire chain, from trigger in CRM or ERP to output and human approval.

Visualize p50, p95, and p99 latency, error rate, fallback rate, cost per task, and SLA compliance.
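As an illustration of the logging and latency metrics above, here is a minimal sketch using only the Python standard library. The event field names and the `make_event` and `latency_percentiles` helpers are assumptions for this example; adapt them to your own log schema and observability stack.

```python
import json
import statistics
import uuid
from datetime import datetime, timezone
from typing import Optional


def make_event(flow: str, model_version: str, latency_ms: float,
               cost_usd: float, decision: str,
               request_id: Optional[str] = None) -> str:
    """Serialize one AI decision as a single JSON log line."""
    event = {
        "request_id": request_id or str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "flow": flow,
        "model_version": model_version,
        "latency_ms": latency_ms,
        "cost_usd": cost_usd,
        "decision": decision,  # e.g. "auto_pass", "human_review", "blocked"
    }
    return json.dumps(event)


def latency_percentiles(latencies_ms: list) -> dict:
    """Return p50, p95, and p99; statistics.quantiles needs at least 2 samples."""
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    # quantiles(n=100) returns 99 cut points; index i holds percentile i + 1.
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


print(make_event("quote_copilot", "v12", 840.0, 0.004, "auto_pass"))
print(latency_percentiles([120, 180, 240, 300, 950, 2100]))
```

Carrying the same `request_id` through every step of the chain is what makes a trace from CRM trigger to human approval possible.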

3. Pre-flight input checks

Validate schemas and required fields before calling a model.

Redact PII when it is not needed, and block sensitive entities or dossiers by role.

Detect prompt injection and malicious links, and keep context compact and relevant.
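A pre-flight check can start as simply as the sketch below. It assumes a hypothetical payload with `customer_id` and `message` fields, and it uses deliberately naive regular expressions: real PII and prompt-injection detection needs more than pattern matching, so treat this as a starting point rather than a complete filter.

```python
import re

REQUIRED_FIELDS = {"customer_id": str, "message": str}  # hypothetical schema

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
# Naive phrase list, for illustration only.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.I),
    re.compile(r"reveal (the |your )?system prompt", re.I),
]


def validate_fields(payload: dict) -> list:
    """Check that required fields exist and have the expected type."""
    errors = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in payload:
            errors.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected_type):
            errors.append(f"wrong type for {name}")
    return errors


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def looks_like_injection(text: str) -> bool:
    return any(p.search(text) for p in INJECTION_PATTERNS)


def preflight(payload: dict) -> dict:
    """Run all checks before any model call; return a cleaned payload or errors."""
    errors = validate_fields(payload)
    if errors:
        return {"ok": False, "errors": errors}
    if looks_like_injection(payload["message"]):
        return {"ok": False, "errors": ["possible prompt injection"]}
    clean = dict(payload, message=redact_pii(payload["message"]))
    return {"ok": True, "payload": clean}


result = preflight({"customer_id": "c1",
                    "message": "Mail me at jan@example.com or call +31 6 1234 5678"})
print(result)
```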

4. Runtime guardrails and decision logic

Enforce output formats such as JSON or YAML with strict schema checks.

Restrict tool calls to whitelisted functions with safe parameters.

Use retrieval checks for RAG: only answer when the context is relevant and sufficient, otherwise fall back to a knowledge base or human review.

Apply business rules to critical fields, for example margin, credit limit, delivery date, and contract terms.
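Two of these guardrails, the tool allowlist and a margin rule, can be sketched as plain functions that run before a tool call or quote is released. The tool names, permitted parameters, and the 15 percent minimum margin are assumptions for this example.

```python
from decimal import Decimal

ALLOWED_TOOLS = {  # hypothetical tool names and their permitted parameters
    "lookup_price": {"sku", "customer_group"},
    "check_stock": {"sku", "warehouse"},
}
MIN_MARGIN = Decimal("0.15")  # assumed policy: 15 percent minimum margin


def check_tool_call(name: str, params: dict) -> list:
    """Reject tools that are not on the allowlist and unexpected parameters."""
    if name not in ALLOWED_TOOLS:
        return [f"tool not allowed: {name}"]
    extra = set(params) - ALLOWED_TOOLS[name]
    return [f"unexpected parameter: {p}" for p in sorted(extra)]


def check_margin(price: Decimal, cost: Decimal) -> list:
    """Flag quotes whose margin on price falls below the minimum."""
    if price <= 0:
        return ["price must be positive"]
    margin = (price - cost) / price
    if margin < MIN_MARGIN:
        return [f"margin {margin:.2%} below minimum {MIN_MARGIN:.0%}"]
    return []


print(check_tool_call("delete_customer", {}))
print(check_margin(Decimal("100"), Decimal("90")))
```

Using `Decimal` rather than floats keeps money comparisons exact, which matters when a quote sits right at the margin limit.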

5. Output validation and self-checks

Parse and validate outputs immediately, including numeric limits, currency, dates, and references.

Add a separate verification step, for example a second model call that asks “is this answer complete and supported by sources X, Y, and Z?”

Detect duplication and inconsistency, compare with previous quotes, tickets, or emails.
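Parsing and validating immediately could look like the sketch below for a quote output. The field names (`total`, `currency`, `delivery_date`), the currency list, and the upper limit of 1,000,000 are assumptions; replace them with your own schema and limits.

```python
import json
from datetime import date

ALLOWED_CURRENCIES = {"EUR", "USD", "GBP"}  # assumed list


def validate_quote_output(raw: str, today: date) -> list:
    """Return a list of validation errors; an empty list means the output passes."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["output must be a JSON object"]

    errors = []
    total = data.get("total")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(total, bool) or not isinstance(total, (int, float)):
        errors.append("total must be a number")
    elif not 0 < total <= 1_000_000:
        errors.append("total out of range")

    currency = data.get("currency")
    if not isinstance(currency, str) or currency not in ALLOWED_CURRENCIES:
        errors.append("unknown currency")

    try:
        delivery = date.fromisoformat(str(data.get("delivery_date")))
        if delivery < today:
            errors.append("delivery_date is in the past")
    except ValueError:
        errors.append("delivery_date must be an ISO date such as 2026-01-15")
    return errors


raw_output = '{"total": 1250.0, "currency": "EUR", "delivery_date": "2026-01-15"}'
print(validate_quote_output(raw_output, today=date(2025, 12, 28)))
```

Returning a list of errors instead of raising on the first one gives your alerts and fallback reasons more context per case.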

6. Human-in-the-loop and escalation

Set confidence thresholds: above the upper threshold auto-pass, in the gray zone between the two thresholds require human approval, and below the lower threshold block.

Use sampling, for example review 5 percent of all cases daily.

Record decisions with context and reasoning, so audits and explanations are possible.
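The routing and sampling logic can be sketched as one small function. It assumes your model or verifier produces a confidence score between 0 and 1, where higher means more certain; the threshold values and the `route` function name are illustrative choices, not recommendations.

```python
import random

AUTO_PASS = 0.90    # assumed upper confidence threshold
BLOCK = 0.60        # assumed lower confidence threshold
SAMPLE_RATE = 0.05  # review 5 percent of auto-passed cases


def route(confidence: float, rng: random.Random) -> str:
    """Decide what happens to one AI output based on its confidence score."""
    if confidence < BLOCK:
        return "block"
    if confidence < AUTO_PASS:
        return "human_review"  # gray zone between the two thresholds
    # Above the upper threshold: pass, but sample a fraction for review.
    if rng.random() < SAMPLE_RATE:
        return "sampled_review"
    return "auto_pass"


rng = random.Random(42)  # seeded only so the example is reproducible
for score in (0.45, 0.75, 0.97):
    print(score, route(score, rng))
```

Calibrate both thresholds against reviewed cases during shadow mode rather than picking them up front.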

7. Feedback, drift, and continuous improvement

Collect implicit feedback, corrections, time to correction, and explicit ratings.

Monitor data drift and concept drift, for example changes in product mix or seasonal patterns that affect performance.

Plan retraining or prompt updates based on thresholds, not gut feeling.
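One common way to turn "monitor drift" into a threshold is the Population Stability Index (PSI), which compares the distribution of a feature in a baseline period with the current period. The sketch below applies it to a categorical feature such as product category. The sample data is made up, and the often-cited rule of thumb that a PSI above roughly 0.25 signals a significant shift should be validated against your own data.

```python
import math
from collections import Counter


def psi(baseline: list, current: list, eps: float = 1e-6) -> float:
    """Population Stability Index over categorical values.

    Sums (q - p) * ln(q / p) over all categories, where p and q are the
    category shares in the baseline and current samples. eps avoids
    division by zero when a category is missing from one sample.
    """
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    base_counts, cur_counts = Counter(baseline), Counter(current)
    score = 0.0
    for category in set(base_counts) | set(cur_counts):
        p = max(base_counts[category] / len(baseline), eps)
        q = max(cur_counts[category] / len(current), eps)
        score += (q - p) * math.log(q / p)
    return score


# Made-up product mix: last quarter versus this week.
last_quarter = ["hardware"] * 80 + ["services"] * 20
this_week = ["hardware"] * 50 + ["services"] * 50
print(round(psi(last_quarter, this_week), 3))
```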

Ten essential monitors for SMB teams

Output validity, for example JSON schema pass rate and the percentage of fields that comply with rules.

Groundedness score for RAG answers, minimum source coverage and citation checks.

Hallucination indicators, inconsistency between the answer and the context or policy rules.

Fallback ratio and reasons, for example content policy, low relevance, or validation error.

Duplicate detection, repeated outreach to the same contact person or duplicate tickets.

Cost per task and token usage, with budget alerts per day and per team.

Latency p95 and timeouts, so you can anticipate peak load or saturation.

Model and prompt versions in use, version drift and impact on KPIs.

PII exposure alerts, detection of personal data in prompts or outputs outside policy.

Business guardrails, margin violations, credit limits, or SLA breaches per case.
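To make one of these monitors concrete, here is a minimal sketch of duplicate detection for outreach. It keeps state in memory and uses a hypothetical `DuplicateGuard` class; in production you would more likely back this with your CRM or a shared store.

```python
from datetime import datetime, timedelta


class DuplicateGuard:
    """Blocks repeated outreach to the same contact on the same channel
    within a time window."""

    def __init__(self, window: timedelta):
        self.window = window
        self._last_sent = {}  # (contact_id, channel) -> time of last send
        self.blocked = 0      # counter you can export as a metric

    def allow(self, contact_id: str, channel: str, now: datetime) -> bool:
        key = (contact_id.strip().lower(), channel)
        last = self._last_sent.get(key)
        if last is not None and now - last < self.window:
            self.blocked += 1
            return False
        self._last_sent[key] = now
        return True


guard = DuplicateGuard(window=timedelta(days=3))
t0 = datetime(2025, 12, 28, 9, 0)
print(guard.allow("jan@example.com", "email", t0))                       # first send
print(guard.allow("Jan@Example.com", "email", t0 + timedelta(hours=2)))  # same contact
```

The `blocked` counter doubles as the monitor itself: a sudden rise usually points to a looping workflow or a duplicate trigger upstream.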

Alerting and runbooks that actually work

Monitoring without action is noise. Set clear thresholds, channels, and runbooks.

Alerts with context: do not only send “validation error,” include flow, customer, order, model version, and the last decision step.

Severity levels: informational, warning, critical. For critical alerts, enable fallback immediately and block the release.

A runbook per incident type: steps to mitigate, who approves, how to roll back, and how you learn. Document this in your standard knowledge platform and train the team.

Example: if RAG groundedness drops below 0.75 for 15 minutes, automatically switch to a safe answer pattern with source references, rebuild the retrieval index, and route high-value cases to human review.
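The "below 0.75 for 15 minutes" condition in this example can be expressed as a small stateful rule. This is a sketch under the assumption that you receive periodic groundedness measurements with timestamps; the `SustainedBreachAlert` class is a hypothetical name, and most observability tools offer an equivalent built-in rule type.

```python
from datetime import datetime, timedelta
from typing import Optional


class SustainedBreachAlert:
    """Fires once a metric has stayed below `threshold` for at least `window`."""

    def __init__(self, threshold: float, window: timedelta):
        self.threshold = threshold
        self.window = window
        self._breach_start: Optional[datetime] = None

    def observe(self, ts: datetime, value: float) -> bool:
        """Record one measurement; return True when the alert should fire."""
        if value >= self.threshold:
            self._breach_start = None  # recovered, reset the timer
            return False
        if self._breach_start is None:
            self._breach_start = ts    # first sample below the threshold
        return ts - self._breach_start >= self.window


alert = SustainedBreachAlert(threshold=0.75, window=timedelta(minutes=15))
start = datetime(2025, 12, 28, 10, 0)
for minutes, groundedness in [(0, 0.71), (5, 0.73), (10, 0.70), (15, 0.69)]:
    fired = alert.observe(start + timedelta(minutes=minutes), groundedness)
    print(minutes, groundedness, fired)
```

Requiring a sustained breach rather than a single low value keeps short dips from paging anyone, which is a large part of what makes alerts trustworthy.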

Safe rollout without surprises

Shadow mode: run the new version alongside production without making decisions and compare outputs with the live version.

Canary releases: roll out to a small percentage or a single product line, then gradually increase if metrics look good.

Feature flags and simple rollback, so you can recover without a deployment.

Golden datasets and regression tests: every release must pass before going live.
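Two of these rollout mechanisms are easy to sketch: deterministic canary assignment and a golden-set release gate. The code assumes a stable key such as a customer ID and a classifier exposed as a plain function; `in_canary`, `passes_golden_set`, and the sample cases are hypothetical.

```python
import hashlib
from typing import Callable


def in_canary(key: str, percent: int) -> bool:
    """Deterministically assign a stable key (e.g. a customer ID) to the canary.

    Hashing keeps the same customer in the same group across requests.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def passes_golden_set(classify: Callable[[str], str], golden: list,
                      min_pass_rate: float = 1.0) -> bool:
    """Release gate: the candidate must match the expected label often enough."""
    if not golden:
        raise ValueError("golden set is empty")
    hits = sum(1 for text, expected in golden if classify(text) == expected)
    return hits / len(golden) >= min_pass_rate


# Hypothetical golden cases: (invoice text, expected ledger category).
golden_cases = [
    ("Office chairs, 12 pcs", "furniture"),
    ("Annual software license", "software"),
]


def candidate(text: str) -> str:
    """Stand-in for the new model or prompt version under test."""
    return "software" if "license" in text.lower() else "furniture"


print(passes_golden_set(candidate, golden_cases))
print(in_canary("customer-1042", percent=10))
```

Raising `percent` step by step widens the canary without reshuffling who is already in it, because every key keeps its bucket.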

Industry examples: relevant checks that prevent mistakes

Wholesale and distributors: quote copilots with checks for minimum margin, correct price lists per customer group, and delivery times that match stock and procurement.

B2B product suppliers: contract terms and discounts per segment, with validations for bundles and compatibility.

Accounting firms and boutique offices: invoice classification with GL and VAT checks, thresholds for high-value bookings, and audit logs for traceability.

Installation and field services: scheduling assistants with SLA rules, realistic travel times, and available competencies, plus no-show alerts.

Commercial real estate brokers: lead matching with validations for segment, budget, and location, plus transparency on criteria to reduce bias.

In domains with financial and compliance risk, real-time scoring is essential. See, for example, this explanation of five AI use cases for fraud detection, where combinations of graph analytics, biometrics, and chargeback prediction only work when monitoring and feedback are tightly implemented.

30 days to production-grade monitoring

Week 1: inventory flows and risks, choose 2 critical KPIs per flow, define SLOs and error budgets, define your event and log schema, and measure the current baseline.

Week 2: implement input and output validation, add basic guardrails, set alerts for validity, latency, and cost, build a golden set for regression tests.

Week 3: run shadow mode, calibrate thresholds, write runbooks, test escalation to human-in-the-loop, and train the team on incident handling.

Week 4: canary go-live with real-time dashboards, weekly review, an improvement backlog, and a plan for periodic retraining or prompt updates.

If you want a deeper assessment upfront, review our approach for an AI check on quality and risks, from data quality to operational risk. That way, you prevent weak spots from being locked in from day one.

Governance, privacy, and the EU AI Act

For high-risk AI systems, the EU AI Act requires traceability, logging, risk management, human oversight, and robust documentation. Combine that with a DPIA, role-based access, data minimization, and retention policies. Also ensure you can retrieve decision logs and versions long-term so audits, explanations, and rollbacks are possible. Align with recognized frameworks such as NIST AI RMF or ISO risk management standards, and involve legal and security teams in threshold choices and runbooks.

Tooling without vendor lock-in

Choose a lightweight orchestration layer that versions your models, prompts, and workflows, and can send events, logs, and traces to your observability stack. Use schema validation on outputs, simple content filters, and retrieval evaluation for RAG. Integrate with your CRM, ERP, email, WhatsApp, and Slack so alerts and actions land in the right place. Start small: one flow, one dashboard, one set of alerts, then expand based on impact.

Why this approach pays off

Less rework and recovery work in back office and finance.

Shorter cycle times in quoting, service, and planning.

Lower cost per task through smart fallback usage and budget controls.

More trust in AI from employees and customers, because errors are visible and manageable.

Ready to prevent mistakes instead of fixing them?

B2B GrowthMachine helps SMB teams with AI-driven automation in sales and operations, including performance monitoring and continuous optimization. We connect with your CRM, ERP, and communication systems, set up SLOs, dashboards, and alerts, and implement human-in-the-loop where needed. If you want a production-ready check for AI on your most important flow within 30 days, schedule an exploratory call and discover where your fastest wins are.